Olfaction and its combination with visual stimuli in the creation of interactive and immersive environments, with the potential to enhance personal engagement and well-being: a transdisciplinary collaborative research project integrating science, technology and art.

Lead researchers and authors: Prof Takamichi Nakamoto, Saya Onai, IIR Laboratory for Future Interdisciplinary Research of Science and Technology, School of Engineering, Tokyo Institute of Technology; Nathan Cohen, Tokyo Institute of Technology WRHI Visiting Professor, Central Saint Martins, University of the Arts London.

Since March 2020 we have been working on an olfactory project that investigates how smell, in combination with visual stimuli, influences our impression of an environment. We are also asking how this could inform the creation of immersive, interactive scenes for Virtual Reality (VR) and Augmented Reality (AR) users.

Smell is a complex medium to work with, posing challenges for developers, technologists and creatives in its identification, application and handling. Invisible yet, to varying degrees, influential in how we interpret our environment, it has been a source of fascination and inspiration for centuries. Samples of aromatic substances have been found in the graves of early Egyptians, and in refined forms such substances have remained rare and sought-after commodities across cultures through to the present day.

Smell, or more particularly the odours which form it, can be distributed artificially in different ways. Perfume is worn directly on the body or clothing. Aromatic oil or alcohol-based solutions can be sniffed from a simple container or from smelling sticks that absorb the sample. When the container or stick is moved under the nose, the aromatic compounds in the sample are released into the air. We then inhale them through our nasal passages, where smell receptors relay the experience to the brain. This involves a simple haptic encounter with the smell sample. Alternatives include burning incense, warming essential oils, and using aerosol sprays and gel dispensers to disseminate odours more widely within a space.

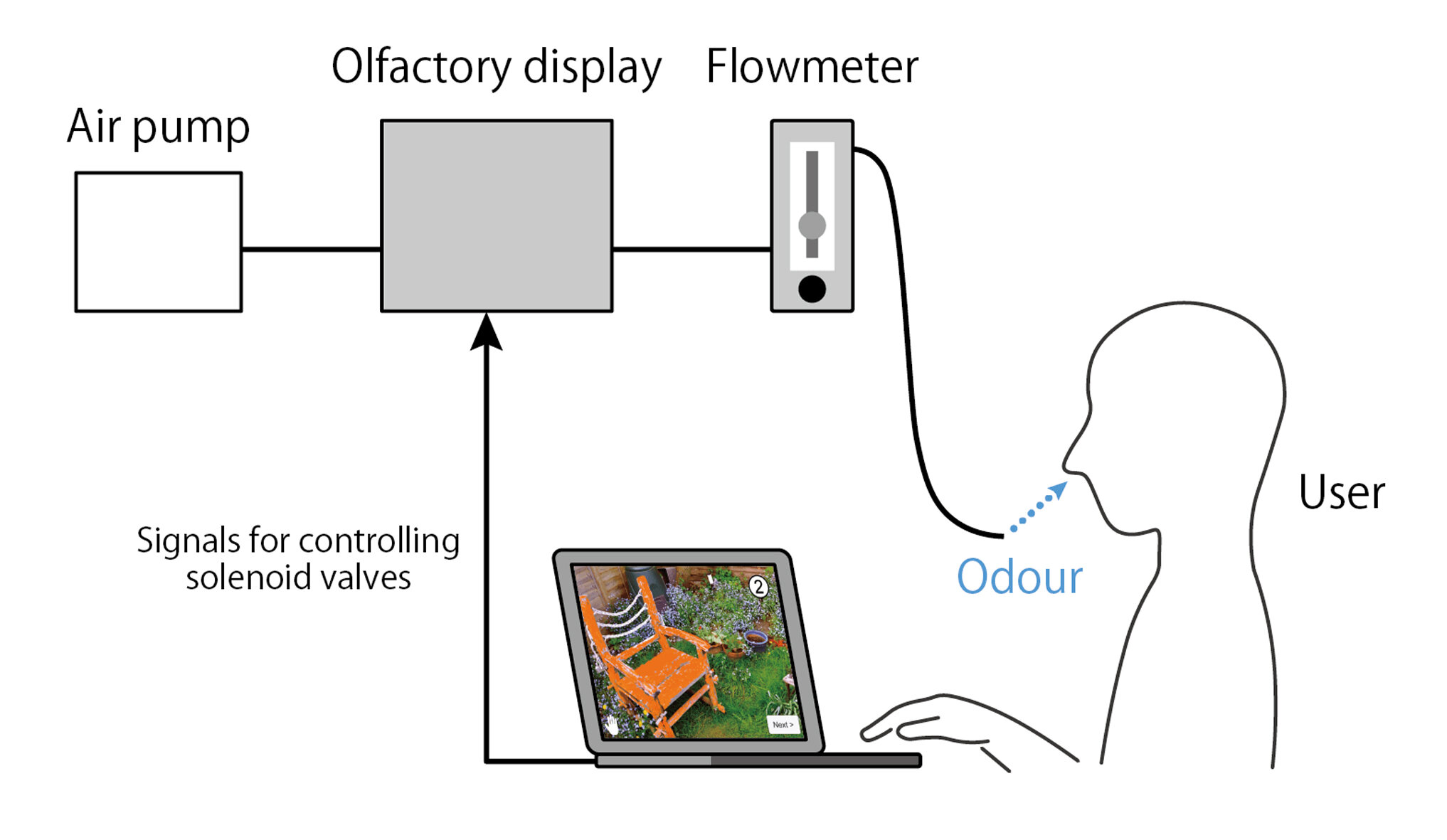

A high-speed solenoid valve open/close olfactory display setup in the Nakamoto Laboratory

Diagram illustrating use of the high-speed solenoid valve open/close olfactory display setup in the Nakamoto Laboratory.

However, offering a range of odours in a way that is more intimate, and that corresponds to other stimuli within a controlled and time-based environment, requires a different approach. Over recent years Takamichi Nakamoto and his team in the Nakamoto Laboratory have developed a technical, computerised approach to delivering odour samples, either through a headset or in close proximity to the user. This approach allows odours to be combined with visual and auditory media in immersive digital environments. Many technical difficulties had to be overcome in building this system. One was how to deliver several odour samples through one device without them contaminating each other. Another was how to link these odours to different aspects of the immersive environment, where they can be encountered over varying durations of time. The device currently being used for our experiments in creating interactive virtual spatio-olfactory games is a high-speed solenoid valve open/close olfactory display.

Scentscape (2019, Nathan Cohen, Reiko Kubota): an interactive olfactory artwork with digital display

One area we are exploring is how smell can encourage memory and enhance narrative association. An earlier example of this can be seen in the boxed artwork Scentscape (2019, Nathan Cohen, Reiko Kubota). In this work, odour samples were presented in small screw-capped glass containers. When handled, the containers triggered sequences of still images on a video screen showing the particular places with which the odours are associated. Other objects relating to memories of these places could also be placed within the box. The intention was that users could combine their own imagery, odour samples and objects to create a personalised memory box.

The olfactory displays developed by Takamichi Nakamoto offer a more technical and differently immersive approach. In this current research we will be exploring ways in which the combination of imagery and olfaction can create an enhanced narrative experience for the user through the development of scenes that complement and are complemented by odours. This will also take the form of an animated interactive environment that users engage with.

As Saya Onai states in a co-published paper ‘Significant research has already been done in relation to memory and scene recall that can be induced by scent (odours), but information transmission and scene recall by scent alone has not yet been realised effectively. It has been difficult to achieve a common perception of a particular scene from a certain odour due to the influence of prior experience and differences in individual perceptions.’ * In this research, we are seeking ways to enhance scene recollection by combining olfactory, visual, and possibly auditory, stimuli within an immersive environment.

Pair of images displayed sequentially, with a 10-second blank screen and odour sample between the first and second versions of the image.

To better understand the links between smell and memory recall, the group working on this research designed a supervised experiment. Saya Onai conducted it in the Nakamoto Laboratory in December 2020. Eighteen volunteers were divided into two separate groups.† Group 1 were first shown one of two related images on a monitor screen. The screen then went blank for 10 seconds, during which an odour related to the image was dispensed through the linked headset for 7 seconds. After this pause, the screen displayed the second related image. All the volunteers experienced a sequence of 11 such image pairs with corresponding odours, referred to as ‘scenes’, one after another. After viewing all 11 scenes, participants completed a multiple-choice questionnaire asking them to identify the correct scene for each odour. For Group 1, the questionnaire presented a set of four images per odour, and participants selected the image that corresponded to that odour.
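The presentation sequence described above can be sketched as a simple event timeline. This is a minimal sketch for illustration, not the laboratory's software. Only three values come from the description: the 11 scenes, the 10-second blank pause and the 7-second odour release. The image display time (`image_s`) is a hypothetical value because the text does not state it. The sketch also assumes that the odour release begins as soon as the screen goes blank (`odour_offset_s`). `Event` and `build_session` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Event:
    start_s: float     # seconds from the start of the session
    duration_s: float  # how long the event lasts, in seconds
    action: str        # what the display or the olfactory device does


def build_session(
    n_scenes: int = 11,
    image_s: float = 5.0,         # hypothetical: image display time is not stated
    blank_s: float = 10.0,        # blank-screen pause between the paired images
    odour_s: float = 7.0,         # odour release inside the pause
    odour_offset_s: float = 0.0,  # assumption: release starts when the screen blanks
) -> list[Event]:
    """Return the ordered presentation events for one session."""
    if odour_offset_s + odour_s > blank_s:
        raise ValueError("odour release must fit inside the blank pause")
    events: list[Event] = []
    t = 0.0
    for scene in range(1, n_scenes + 1):
        events.append(Event(t, image_s, f"scene {scene}: show first image"))
        t += image_s
        events.append(Event(t, blank_s, f"scene {scene}: blank screen"))
        # The odour overlaps the blank screen rather than extending the timeline.
        events.append(Event(t + odour_offset_s, odour_s, f"scene {scene}: release odour"))
        t += blank_s
        events.append(Event(t, image_s, f"scene {scene}: show second image"))
        t += image_s
    return events


if __name__ == "__main__":
    # Print the events of the first scene.
    for e in build_session()[:4]:
        print(f"{e.start_s:6.1f} s  {e.duration_s:4.1f} s  {e.action}")
```

Treating the odour as an event that overlaps the blank screen keeps the 10-second pause fixed. The check in `build_session` rejects any offset that would let the release run into the second image.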

Group 1 multiple-choice selection for a test odour

Group 2 multiple-choice selection for a test odour

Group 2 separately took the same test, except that the multiple-choice options for each odour were text-based rather than images.
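With four options per question, a participant guessing at random would choose the correct answer 25% of the time, so identification rates for each scene are best read against that baseline. Below is a minimal sketch of such a comparison. It assumes that each participant gives one answer per scene and that guessing is uniform across options. The function names are hypothetical, and the one-sided binomial tail used here is one common choice, not necessarily the analysis the group carried out. The number of text options offered to Group 2 is not stated, so `n_options` is left as a parameter.

```python
from math import comb


def identification_rate(answers: list[str], correct: str) -> float:
    """Fraction of participants whose chosen option matches the correct one."""
    if not answers:
        raise ValueError("no answers recorded")
    return sum(a == correct for a in answers) / len(answers)


def chance_tail(k_correct: int, n_participants: int, n_options: int = 4) -> float:
    """P(X >= k_correct) for X ~ Binomial(n_participants, 1 / n_options).

    This is the probability of getting at least k_correct right answers if every
    participant guessed uniformly at random among n_options choices.
    """
    p = 1.0 / n_options
    return sum(
        comb(n_participants, i) * p**i * (1.0 - p) ** (n_participants - i)
        for i in range(k_correct, n_participants + 1)
    )
```

For a given scene, `chance_tail(k, n)` gives the probability that k or more of n participants would pick the correct option by guessing alone. A small value suggests that the odour-image association is being recognised rather than guessed. With small groups, such as those in this initial experiment, only large differences from chance will show up clearly.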

Initial analysis of the experiment's results reveals mixed rates of identification, with some odour-image associations stronger than others. We will explore this further to identify which odour-image combinations are more readily identifiable. We will also investigate the temporal nature of odours and odour combinations in creating animated smell scenes that induce narrative association. In addition, we will consider the aesthetic aspects of the game design needed to engage users and enhance their experience of interaction. This will form the basis of the research from April 2021 to March 2022, with the intention of producing a working prototype for display at SIGGRAPH Asia in December 2021.

* Nakamoto, T., Onai, S., Iseki, M., Cohen, N. (2021). 香りによる情景想起の基礎的研究 (Fundamental Study of Association of Scenes with Scents). IEICE General Conference.

† This was an initial experiment conducted under Covid-19 pandemic restrictions, which limited the number of participants.


This research is supported by the Tokyo Tech World Research Hub Initiative (WRHI), Program of the Institute of Innovative Research (IIR), Tokyo Institute of Technology.

© 2021 Photographs and intellectual content - all rights reserved by the authors.